Deepfakes in the World of AI

Deepfakes and AI content are appearing everywhere online. You might have seen videos of people saying things they never said or images that look real but aren’t. These can be funny or interesting, but they can also be misleading and cause problems. As AI tools become easier to use, anyone can make realistic images, videos, and audio.

What Are Deepfakes and Where Do We Find Them?

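A deepfake is a video, image, or audio clip that has been made or altered with AI so that a real person appears to say or do something they never did. Deepfakes are shared on social media, on Google, and in ads. Because they look real, people may believe them, and that can cause harm by leading people to trust things that are not true. AI content is now used more widely in marketing, which can be good, but it also carries risks. If people feel tricked, brands may lose trust and credibility.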

Many people don’t realise when content is made or changed by AI. They may think someone said something or endorsed a product when they didn’t. They might believe a story that is made up. This is why marketers need to be careful.

Why It Matters

Marketers have a duty to be honest, because audiences who feel misled stop trusting the brand.

Deepfakes can go too far, especially when they copy real people. Even when something is legal, it may not be right. AI will keep changing how content is made, and marketers should use it in a safe and fair way.

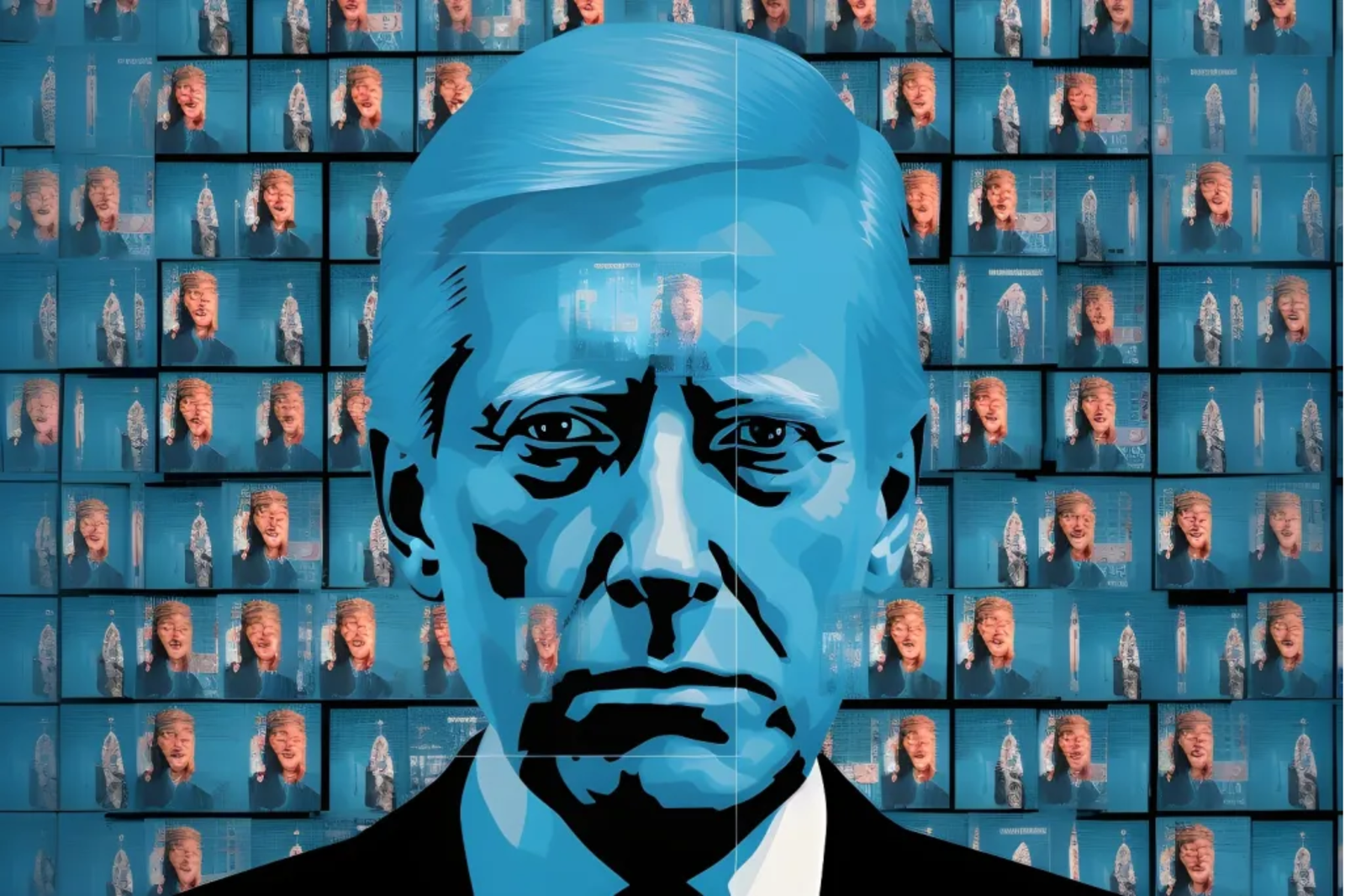

Deepfakes can be used almost like a mask, creating a template of someone’s facial expressions and even stealing their voice. The person creating the deepfake can hide behind a friendlier or more recognisable face. In the example shown, the creator has used a recognisable face, which can set off chains of dangerous misinformation.

The digital world is more complicated now, which means safeguarding matters a lot. Brands should think about the effects of their content, not just how cool it looks, particularly when they use AI to generate it.

To find out more, check out our downloadable resource, which shows more telltale signs to help you spot a deepfake.